What Happens When Police Misuse Facial Recognition Software?

Facial Recognition and Public Safety

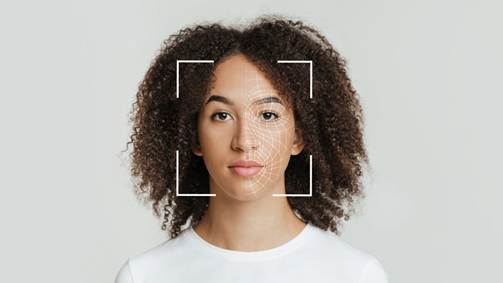

Many law enforcement agencies across New York State now rely on facial recognition software. It’s been used to track down suspects, match faces to security footage, and monitor heavily trafficked areas like the subway system. Supporters claim it improves public safety. But concerns about bias, misidentification, and a lack of human oversight continue to grow.

When police misuse facial recognition software, the damage hits fast and cuts deep. Wrongful arrests, public embarrassment, and lasting harm to innocent people are all real consequences. At Horn Wright, LLP, our civil rights attorneys represent New Yorkers whose lives have been shaken by this kind of error. If facial recognition played a role in your false arrest or rights violation, our attorneys are here to help you fight back.

How Law Enforcement Uses Facial Recognition in New York

Police departments in New York aren’t shy about embracing new technology. Over the past decade, facial recognition tools have quietly become part of routine investigations. The NYPD, in particular, has used the software since at least 2011, pulling data from a massive pool of images that includes mugshots, DMV records, social media photos, and security cameras.

While facial recognition use isn’t always visible to the public, it touches many corners of daily life across the state. In places like Manhattan, Queens, and even upstate cities like Syracuse, the software has been deployed in high-traffic areas such as subway stations and public plazas. Law enforcement may not always tell the public when or how it’s being used, but make no mistake, it’s watching.

Some police departments:

- Scan video footage from security cameras and match it with DMV images

- Use software during live monitoring of protests and public gatherings

- Rely on third-party vendors like Clearview AI, which scrapes images from the internet

All of this happens with little outside review, making it difficult to know when facial recognition crosses the line.

When Facial Recognition Gets It Wrong

Facial recognition software often struggles in real-world settings. Research shows it misidentifies people of color at higher rates, particularly Black, Latino, and Asian individuals. While it may perform well in controlled environments, it’s less reliable on a dark Bronx street or in fast-moving situations in Brooklyn.

The software can suggest a match from blurry footage or from features that are only superficially similar. That resemblance, even if weak, can trigger an arrest if officers don’t verify it further.

There have already been high-profile incidents across the country where innocent people were jailed after faulty facial recognition matches, including Robert Williams, who was wrongfully arrested in Detroit in 2020. In New York, the risk is just as real.

If police rely too heavily on this software:

- Officers may make an arrest based on a single photo match

- Suspects could be charged before any further investigation is done

- Eyewitnesses may be influenced by the match, reinforcing the error

Technology is only as good as the people using it. When that tech goes unchallenged, mistakes turn into injustice.

Real-World Impact: Wrongful Arrests and Legal Fallout

Being wrongly arrested is traumatic. It doesn’t matter how quickly charges are dropped or if you’re released within hours. Once the damage is done, it sticks. For New Yorkers caught in the crosshairs of misused facial recognition, that damage often includes public humiliation, lost wages, legal fees, and long-term emotional distress.

You may find yourself sitting in a holding cell, trying to convince a stranger you’ve never been near the scene of the crime. Maybe your family’s worried sick or your job wants answers you can’t provide. In those moments, facial recognition errors stop feeling like a tech glitch and become a personal nightmare.

Across New York State, wrongful arrests based on false matches have led to:

- Arrest records that follow innocent people for years

- Family strain, especially when children witness a parent taken into custody

- Job loss or professional setbacks due to time missed or background checks

- Lingering mental health issues like anxiety and depression

People often don’t realize they were misidentified by facial recognition until much later. By that time, they may have already spent days in custody or made court appearances. The legal system doesn’t move fast, but the impact is immediate.

If your arrest resulted from a flawed facial recognition match, you may be able to pursue compensation under federal and New York civil rights law.

Legal Oversight: Where the System Breaks Down

For all its risks, facial recognition technology remains largely unregulated across New York. No uniform policy exists to guide how police departments should or shouldn’t use it. Instead, local agencies develop their own standards, or sometimes operate without any at all. This inconsistent approach leaves many New Yorkers unsure of their rights when a misidentification occurs.

The NYPD’s internal policy states that facial recognition matches are intended to serve only as investigative leads, not definitive proof. In practice, though, officers may treat the match as conclusive and move forward without proper verification. Supervisors might approve arrests based solely on software outputs, even when evidence is weak.

Legislative gaps that increase the risk of misuse include:

- No required audits of how facial recognition tools are used in individual cases

- No law mandating that police disclose the use of facial recognition during arrest

- Weak oversight boards that lack authority to enforce compliance

Although measures such as New York’s restrictions on facial recognition in schools reflect growing awareness, broader limits on police use have stalled. As a result, individuals harmed by false matches are often left to prove police error on their own.

Community Pushback and Calls for Reform

Across New York, people aren’t staying quiet. In Brooklyn, civil rights groups have marched against overreach in digital surveillance. In Rochester, community organizers have demanded audits of police tech programs. And in Albany, lawmakers continue to debate how far is too far when it comes to artificial intelligence in law enforcement.

Advocates are asking for more than transparency. They want guardrails. That includes bans on real-time facial recognition, better reporting of false matches, and strict penalties for misuse. Some want a full stop: no facial recognition in public spaces at all.

Key concerns voiced by community groups include:

- Unfair targeting of specific neighborhoods with heavy camera coverage

- Lack of consent or notification before being scanned

- The chilling effect on free expression and protest activity

New Yorkers value safety, but not at the expense of fairness. The pushback is about dignity, equal protection, and keeping the government accountable.

In Rochester, some protests grew out of concerns about surveillance and police use of force, particularly after the public release of body camera footage, and the unrest also led to the termination of the police chief.

Your Rights if You’re Misidentified in New York

If you’ve been wrongly accused, arrested, or questioned because of a facial recognition error, you still have rights under New York law. These rights protect you when law enforcement relies on flawed technology or fails to verify software-driven leads. Whether the error resulted from a faulty match or police overreach, you can take action to defend yourself and pursue accountability.

Steps you can take include:

- Ask for all evidence tied to your arrest, including the facial recognition match

- File a complaint with the Civilian Complaint Review Board (CCRB) in New York City, or the New York State Attorney General’s Civil Rights Bureau

- Speak with an experienced civil rights attorney who handles false arrest and police misconduct cases

- Request an immediate review of surveillance footage, if applicable

- Keep a detailed record of every interaction, document, and communication about your case

If your rights were violated and the arrest caused lasting harm, you may have legal grounds to pursue a lawsuit for damages and correction of your record.

Stay Informed, Know Your Rights

Facial recognition software isn’t science fiction. It’s already in use across New York State. And while it can help solve crimes, its unchecked use by law enforcement has created serious problems for innocent people. If you were arrested or harmed because of a bad match, it’s important to act quickly, gather information, and explore your legal options.

At Horn Wright, LLP, we represent individuals across New York who’ve been misidentified, mistreated, or wrongly arrested because of police misuse of technology. We’re here to help you seek justice, protect your rights, and take that weight off your shoulders.

What Sets Us Apart From The Rest?

Horn Wright, LLP is here to help you get the results you need with a team you can trust.

- Client-Focused Approach: We’re a client-centered, results-oriented firm. When you work with us, you can have confidence we’ll put your best interests at the forefront of your case. It’s that simple.

- Creative & Innovative Solutions: No two cases are the same, and neither are their solutions. Our attorneys bring creative points of view to achieve exemplary results.

- Experienced Attorneys: We have a team of trusted and respected attorneys, so your case is matched with the best attorney possible.

- Driven By Justice: The core of our legal practice is our commitment to obtaining justice for those who have been wronged and need a powerful voice.